How to Merge Data Using Change Data Capture in Databricks

.png?width=600&name=How%20to%20Merge%20Data%20Using%20Change%20Data%20Capture%20in%20Databricks%20(1).png)

My post today in our Azure Every Day Databricks mini-series is about Databricks Change Data Capture (CDC). A common use case for Change Data Capture is for customers looking to perform CDC from one or many sources into a set of Databricks Delta tables. The goal here is to merge these changes into Databricks Delta.

For example, let’s say we have a file that comes in on Monday and we ingest that data into a table. A new file comes in on Tuesday and we want to merge the inserts, updates and deletes. In my video below I’ll demo how to do this and to process data using Databricks and Change Data Capture.

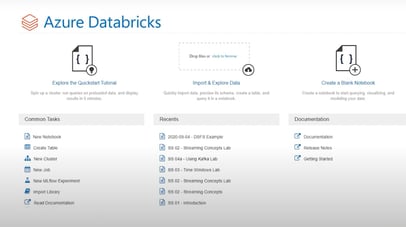

- I begin with a previously created Databricks cluster launched and running. Within the data, I have a file that I ingested called customer 1 CSV.

- I want to import another table which is called customer 2 CSV. I bring this in and on the Create New Table screen, I click on Create Table with UI and select my cluster.

- Next, click on the Preview Table button where we can name the table and then Create Table. This will ingest that file and we now have it available for use within our notebook.

- Click on the Change Data Capture notebook and first thing to do is to drop tables if they already exist, so we don’t get errors further downstream.

- Now we want to interrogate our customer 1 CSV file which has 91 rows. If we interrogate our second table (2 CSV), it has 99 rows; an addition of 8 rows so we’ll want to insert those and there could be possible changes to the existing data.

- The next query we’ll run will the be counts. You’ll see the 91 and the 99, so eight additional rows and possible updates. We see that one record changed, the contact name is different between each file, thus we have an update. So, this is a good use case example that we will see further downstream.

- Next, we’ll create a Delta table based on these fields. We’ll insert a U (hard coded) to identify those as updates and inserts will be identified with “I”. (See my video for more detail on code and queries used.)

- We can select the same row and see the sales representative’s name. If we exclude that statement and rerun, we’ll see the 91 rows, showing that this is the first ingestion.

- What I want to do is merge those two datasets. We started with 91, then we had 99 and now I’m going to compress the data and the records that already existed will be updated.

- When I run my Delta table, it will return 99 rows and if we interrogate, we’ll see a flag field was added. We’ll see a bunch of “U” lines and some “I” lines, approximately 8, which are the new records.

- Further downstream, we’ll run another query which shows the 11 rows, which are the Deltas or the inserts and updates. We had 8 inserts and 3 updates, one being the record we showed earlier with the contact name.

- We can then query to describe the table and it will show you the iterations. The version zero was the first file with 91 records, version 1 is actually the second file with 99 records. This has some useful information that you can interrogate as well. It has a list of what it did, in other words, you can see the first data set ingested 91 rows and it also shows the action performed by the second data set.

- Lastly, I select that specific record I showed earlier from the Delta table and I can see that the name is different on the current record, so this is an update from what was on the first record from the 91 row file with what was in the second 99 row file.

In doing this, you can see how easy it is to process changes over time using the Delta method within Azure Databricks. You can also click here to learn more in this Databricks blog. If you have questions or want to discuss leveraging Databricks, the Power Platform or Azure in general, we are the people to talk to. Our team of experts are here to help—give us a call or click the link below to learn more.

Sign-up now and get instant access

ABOUT THE AUTHOR

Free Trial

On-demand learning

Most Recent

private training

-1.png)

Leave a comment